I saw this tweet from Martin that triggered my thinking. I noticed it because the tweet was getting several responses and Martin poses an interesting question, as he usually does.

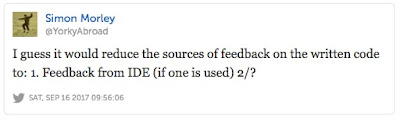

I looked at a few of the replies. The testing community is full of insightful thinkers. These two caught my eye:

Of course, I had to comment on this because it goes to the heart of any hypothesis-based and social-interaction development.

Then I wondered how the replies were being formulated, and even how they were being received.

Maybe I'm overloading Martin's original intent, but I'm trying to extend his line of thinking, so bear with me.

Based on Martin and Jari's questions, one approach to analyzing a situation is to take an item (artefact), an activity or a set of interactions and consider what the situation/system would look like if you flipped its meaning 180 degrees; an opposite, or antonym. Then look at the resulting situation and consider whether you learn anything about the original.

Stumbling block?

The idea of what testing is and isn't, and who does what for what purpose, is not clear. That was part of the reason for my "data question" - who is answering from which perspective, and how does one know? If this were an anthropological or qualitative analysis study, the original question might have been set up differently - but then it might not have gotten the same engagement or attention.

Actually, I think the biggest stumbling block with the replies to the question posed is that the respondents are not indicating what they think of as testing when they give their "opposite of testing" answer. That kind of context is always going to be limited on Twitter.

As interesting as many of the replies are, it's difficult for me to use them to understand "testing" or the system of software development.

That triggered my own thought experiment.

For this experiment, I will not try to define testing directly, but I will use another element in software and product development to understand the potential impact of "no testing". So, the simplistic assumption is that the opposite of "testing" is "no testing", and that its effect can be observed by looking at other characteristics in a product development lifecycle; in this specific case, feedback.

Approach

A system can be analysed by altering the meaning, or purpose, of one of its components and seeing how the resulting new system might perform. Another way is to remove that component.

Compare this to a picture of a group of people - what does the picture tell you about the group, their interaction, their context or their potential? Now remove a person or an item. What changes do you now see in the whole system or story? For example, do you see things that were obscured by the now-removed object or person? (Search for photoshopping, green screening or photo manipulation for examples.)

Why Feedback?

It is difficult to look at a system of interactions that makes up product development and isolate components, activities and elements of output. For me, feedback is an interaction between people or information about a system or product - it can be internal within the system (company, teams) or external (information received or gleaned from outside the company or teams). Internal feedback - to me - has a value, care and thought attached to information or data points, maybe even discussion or talking points.

Compare "login doesn't work" with "login doesn't work under circumstances X, Y & Z" or "login performance has changed since version a.b.c". These are all potential forms of feedback - I claim that the second and third have more value than the first. A team or product owner can react to all of these, but my claim is that it's easier to make decisions based on #2 & #3. Note, I'm making no claims about who or what generates this type of feedback.

Note, my experience is that good testing generates good feedback in systems of product development, which aids a variety of decisions and analyses - sometimes that feedback comes from individuals and sometimes from systems that individuals have put into place to facilitate feedback and analysis.

And so, riffing on testing being removed from software development and the impact on feedback:

Reflections

There are other aspects of product development that one could look at besides feedback.

The timing is implied in these tweets - the implication is that if it takes longer to get feedback and be able to make a decision about it then you (team, product owner or business manager) miss the opportunity to adjust course, make a different decision, conclude that an experiment doesn't / didn't work and adjust investment, etc.

My implication in these tweets is that testing provides valuable feedback to teams and companies.

Another implication is that feedback is in the system of product development and that ultimately it is a social interaction between individuals.

I do not make a claim that testers own feedback. Good testers can contribute to good feedback systems and delivery.

Extending that: the proximity of feedback to when code is written is vital for hypothesis- or experiment-based development.

I think I've probably generated a few question marks that need explanation and extension, but I will leave that to another post.